A Microcontroller (also MCU or µC) is a computer-on-a-chip. It is a type of microprocessor emphasizing high integration, low power consumption, self-sufficiency and cost-effectiveness, in contrast to a general-purpose microprocessor (the kind used in a PC).

Microcontrollers are frequently used in automatically controlled products and devices, such as automobile engine control systems, remote controls, office machines, appliances, robotics, power tools, and toys. By reducing the size, cost, and power consumption compared to a design using a separate microprocessor, memory, and input/output devices, microcontrollers make it economical to electronically control many more processes.

Embedded design:

The majority of computer systems in use today are embedded in other machinery, such as telephones, clocks, appliances, and vehicles. An embedded system may have minimal requirements for memory and program length. Input and output devices may be discrete switches, relays, or solenoids. An embedded controller may lack any human-readable interface devices at all. For example, embedded systems usually don't have keyboards, screens, disks, printers, or other recognizable I/O devices of a personal computer. Microcontrollers may control electric motors, relays or voltages, and may read switches, variable resistors or other electronic devices.

Programming Environments:

Microcontrollers were originally programmed only in assembly language, but various high-level programming languages are now also in common use to target microcontrollers. These languages are either designed specially for the purpose, or versions of general purpose languages such as the C programming language. Compilers for general purpose languages will typically have some restrictions as well as enhancements to better support the unique characteristics of microcontrollers.

Interpreter firmware is also available for some microcontrollers. The Intel 8052 and Zilog Z8 were available with BASIC very early on, and BASIC is more recently used in the BASIC Stamp MCUs. Some microcontrollers have environments to aid in developing certain types of applications, e.g. Analog Devices' Blackfin processors with the LabVIEW environment and its programming language "G".

Simulators are available for some microcontrollers, such as in Microchip's MPLAB environment. These allow a developer to analyse how the microcontroller and their program should behave on the actual part. A simulator shows the internal processor state and the state of the outputs, and allows input signals to be generated. Although most simulators cannot simulate much of the other hardware in a system, they can exercise conditions that may otherwise be hard to reproduce at will in the physical implementation, and can be the quickest way to debug and analyse problems.

Recent microcontrollers integrate on-chip debug circuitry that, when accessed by an in-circuit emulator via JTAG, enables a programmer to debug the software of an embedded system with a debugger.

The use of microcontrollers in robotics depends on the requirements.


A few of the companies that manufacture microcontrollers are listed below:

1) ATMEL Corporation: It is a manufacturer of semiconductors, founded in 1984. Its focus is on system-level solutions built around flash microcontrollers. Its products include microcontrollers (including 8051 derivatives and AT91SAM and AT91CAP ARM-based micros), its own ATMEL AVR and AVR32 architectures, radio frequency (RF) devices, EEPROM and Flash memory devices (including DataFlash-based memory), and a number of application-specific products. ATMEL supplies its devices as standard products, ASICs, or ASSPs depending on the requirements of its customers. In some cases it is able to offer system-on-chip solutions.

ATMEL serves a range of application segments including consumer, communications, computer networking, industrial, medical, automotive, aerospace and military. It is an industry leader in secure systems, notably for the smart card market.

A few of ATMEL's microcontrollers:

• AT89 series (Intel 8051 architecture)

• AT90, ATtiny, ATmega series (AVR architecture) (Atmel Norway design)

• AT91SAM (ARM architecture)

• AVR32 (32-bit AVR architecture)

• MARC4

2) Cypress MicroSystems:

Cypress MicroSystems (CMS) (not to be confused with the cypress tree) markets high-performance, field Programmable System-on-a-Chip (PSoC) integrated M8 micro-based solutions. CMS is based in Lynnwood, near Seattle, Washington, and was established as a subsidiary of Cypress Semiconductor Corporation in the fourth quarter of 1999. One of its best engineers is Bert Sullam. Cypress's microchips have grown from a small, simple chip into some of the best and highest-quality microchips, and can now be found almost anywhere.

Cypress MicroSystems was purchased by Cypress Semiconductor in the 4th quarter of 2005, consistent with the original business plan of the start-up.

More updates soon. Please bear with me in the meantime, and if you have any specific request, please leave a comment.

Friday, March 28, 2008

Microcontrollers

Monday, March 24, 2008

Artificial Brain

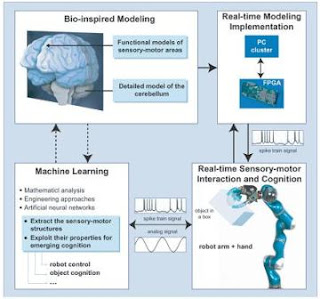

The objective of this research is for the Artificial Brain to provide the robot with the abilities to perceive an environment, to interact with humans, to make intelligent decisions and to learn new skills.

An international team of European researchers has implanted an artificial cerebellum — the portion of the brain that controls motor functions — inside a robotic system. This EU-funded project is dubbed SENSOPAC, an acronym for ‘SENSOrimotor structuring of perception and action for emerging cognition.’

One of the goals of this project is to design robots able to interact with humans in a natural way. This project, which should be completed at the end of 2009, also aims to produce robots which would act as home-helpers for disabled people, including persons affected by neurological disorders such as Parkinson’s disease.

The European SENSOPAC project started on January 1, 2006 and will take 4 years to complete. The 12 organizations participating in the project come from 9 different countries and have provided physicists, neuroscientists and electronic engineers.

The microchips which incorporate a full neuronal system have been designed at the University of Granada, Spain. “Implanting the man-made cerebellum in a robot will allow it to manipulate and interact with other objects with far greater effectiveness than previously managed.

‘Although robots are increasingly more important to our society and have more advanced technology, they cannot yet do certain tasks like those carried out by mammals,’ says Professor Eduardo Ros Vidal, who is coordinating the work at the University of Granada. ‘We have been talking about humanoids for years but we do not yet see them on the street or use the unlimited possibilities they offer us,’ the Professor added.”

Also

A team of South Korean scientists has made a breakthrough in developing an artificial brain system, which enables a robot to make a decision in tune with different situations.

The team, headed by Prof. Kim Jong-hwan at the Korea Advanced Institute of Science and Technology, said Thursday that they are trying to incorporate the system into physical robots.

The brain system is a software robot, called sobot, which imitates the thinking mechanism architecture of human brains from sensing to decision-making and behaviors.

Kim said the new-fangled software robot is the world's first one that can make a decision based on contexts, or check surroundings before opting on how to behave.

"Let me take an example. When an owner returns home, a robot is charging its battery. Then, it is supposed to stop consuming electricity and greet the human," Kim said.

"When the machine charged itself enough, it will know so. But if its battery runs low, it will select to remain being plugged in. In other words, it thinks close to a human," he said.

Emulating the human brain, the software robot is composed of six modules for as many functions: perception, context awareness, internal status, memory, behavior and actuator.

"We will continue to put forth efforts to enhance the capacity of software robots in analyzing the environment and reaching conclusions before taking actions," Kim said. "Such attempts will eventually lead to the development of human-like robots."

KT, the country's predominant telecom operator, already recognized the exponential potential of the sophisticated system.

Beginning next month, Kim's team will carry out a joint research program together with KT regarding the smart software architecture.

Kim is well known globally for founding and leading the Federation of International Robot Soccer, where robotic drones duke it out on the pitch.

He also gained prominence in late 2004 by unveiling a software robot, which is programmed with 14 artificial chromosomes that control a total of 77 behaviors.

According to Kim, the software robot, which he claims evolves through crossover and mutation, can offer personality to robot hardware.

Wednesday, March 19, 2008

Interfaces

Having discussed Embedded Robotic Controllers in my previous post, it's now time to discuss the interfaces.

A number of interfaces are available on most embedded systems. These are digital inputs, digital outputs, and analog inputs. Analog outputs are not always required and would also need additional amplifiers to drive any actuators. Instead, DC motors are usually driven by using a digital output line and a pulsing technique called “pulse width modulation” (PWM).
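As a rough illustration of the pulse width modulation idea, the sketch below computes the average voltage an ideal PWM signal delivers for a given duty cycle, and how one period splits into high and low time. The function names, the 5 V supply and the 1 kHz period are assumptions made for this example; they are not taken from the M68332 or any particular controller.

```python
def pwm_average_voltage(supply_voltage, duty_cycle):
    """Average voltage of an ideal PWM signal.

    duty_cycle is the fraction of each period the line is high (0.0 to 1.0).
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0.0 and 1.0")
    return supply_voltage * duty_cycle


def pwm_on_off_times(period_s, duty_cycle):
    """Split one PWM period into (high time, low time) in seconds."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0.0 and 1.0")
    on_time = period_s * duty_cycle
    return on_time, period_s - on_time


# Assumed example values: 5 V digital output, 1 kHz PWM, 25 % duty cycle.
average = pwm_average_voltage(5.0, 0.25)
on_time, off_time = pwm_on_off_times(0.001, 0.25)
print(average, on_time, off_time)
```

A real motor driver adds an amplifier stage (for example an H-bridge) between the digital output and the motor, so the voltage seen by the motor is scaled by the driver's supply rather than by the logic level.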

The Motorola M68332 microcontroller already provides a number of digital I/O lines, grouped together in ports. We are utilizing these CPU ports, as can be seen in the image below, but also provide additional digital I/O pins through latches.

Most important is the M68332’s TPU. This is basically a second CPU integrated on the same chip, but specialized for timing tasks. It tremendously simplifies many time-related functions, like periodic signal generation or pulse counting, which are frequently required for robotics applications.

The first two pictures above show the EyeCon board with all its components and interface connections from the front and back. Our design objective was to make the construction of a robot around the EyeCon as simple as possible. Most interface connectors allow direct plug-in of hardware components. No adapters or special cables are required to plug servos, DC motors, or PSD sensors into the EyeCon. Only the HDT software needs to be updated by simply downloading the new configuration from a PC; then each user program can access the new hardware.

The parallel port and the three serial ports are standard ports and can be used to link to a host system, other controllers, or complex sensors/actuators. Serial port 1 operates at V24 level, while the other two serial ports operate at TTL level.

The Motorola background debugger (BDM) is a special feature of the M68332 controller. Additional circuitry is included in the EyeCon, so only a cable is required to activate the BDM from a host PC. The BDM can be used to debug an assembly program using breakpoints, single step, and memory or register display. It can also be used to initialize the flash-ROM if a new chip is inserted or the operating system has been wiped by accident.

At The University of Western Australia, a stand-alone, boxed version of the EyeCon controller is used for lab experiments in the Embedded Systems course. These boxed controllers are used for the first block of lab experiments until we switch to the EyeBot Labcars.

Monday, March 17, 2008

Embedded Robotic Controllers

The centerpiece of all our robot designs is a small and versatile embedded controller that each robot carries on-board. We called it the “EyeCon” (EyeBot controller, Figure 1.6), since its chief specification was to provide an interface for a digital camera in order to drive a mobile robot using on-board image processing [Bräunl 2001].

Robots and Controllers

The EyeCon is a small, light, and fully self-contained embedded controller. It combines a 32bit CPU with a number of standard interfaces and drivers for DC motors, servos, several types of sensors, plus of course a digital color camera. Unlike most other controllers, the EyeCon comes with a complete built-in user interface: it comprises a large graphics display for displaying text messages and graphics, as well as four user input buttons. Also, a microphone and a speaker are included. The main characteristics of the EyeCon are:

EyeCon specs:

• 25MHz 32bit controller (Motorola M68332)

• 1MB RAM, extendable to 2MB

• 512KB ROM (for system + user programs)

• 1 Parallel port

• 3 Serial ports (1 at V24, 2 at TTL)

• 8 Digital inputs

• 8 Digital outputs

• 16 Timing processor unit inputs/outputs

• 8 Analog inputs

• Single compact PCB

• Interface for color and grayscale camera

• Large graphics LCD (128×64 pixels)

• 4 input buttons

• Reset button

• Power switch

• Audio output

• Piezo speaker

• Adapter and volume potentiometer for external speaker

• Microphone for audio input

• Battery level indication

• Connectors for actuators and sensors:

• Digital camera

• 2 DC motors with encoders

• 12 Servos

• 6 Infrared sensors

• 6 Free analog inputs

One of the biggest achievements in designing hardware and software for the EyeCon embedded controller was interfacing to a digital camera to allow on-board real-time image processing. We started with grayscale and color Connectix “QuickCam” camera modules for which interface specifications were available. However, this was no longer the case for successor models and it is virtually impossible to interface a camera if the manufacturer does not disclose the protocol. This led us to develop our own camera module “EyeCam” using low resolution CMOS sensor chips. The current design includes a FIFO hardware buffer to increase the throughput of image data.

A number of simpler robots use only 8bit controllers [Jones, Flynn, Seiger 1999]. However, the major advantage of using a 32bit controller versus an 8bit controller is not just its higher CPU frequency (about 25 times faster) and wider word format (4 times), but the ability to use standard off-the-shelf C and C++ compilers. Compilation makes program execution about 10 times faster than interpretation, so in total this results in a system that is 1,000 times faster. We are using the GNU C/C++ cross-compiler for compiling both the operating system and user application programs under Linux or Windows. This compiler is the industry standard and highly reliable. It is not comparable with any of the C-subset interpreters available.
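The 1,000-fold figure is simply the product of the three factors quoted in the paragraph above. The short sketch below spells out that arithmetic; the function and variable names are made up for illustration, and multiplying the factors assumes, as the text does, that they are independent.

```python
def combined_speedup(*factors):
    """Multiply independent speedup factors into one overall factor."""
    total = 1
    for factor in factors:
        total *= factor
    return total


clock_factor = 25    # higher CPU frequency (figure from the text)
word_factor = 4      # 32bit versus 8bit word width (figure from the text)
compile_factor = 10  # compiled versus interpreted code (figure from the text)

total = combined_speedup(clock_factor, word_factor, compile_factor)
print(total)
```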

The EyeCon embedded controller runs our own “RoBIOS” (Robot Basic Input Output System) operating system that resides in the controller’s flash-ROM. This allows a very simple upgrade of a controller by simply downloading a new system file. It only requires a few seconds and no extra equipment, since both the Motorola background debugger circuitry and the writeable flash-ROM are already integrated into the controller.

RoBIOS combines a small monitor program for loading, storing, and executing programs with a library of user functions that control the operation of all on-board and off-board devices (see Appendix B.5). The library functions include displaying text/graphics on the LCD, reading push-button status, reading sensor data, reading digital images, reading robot position data, driving motors, v-omega (vω) driving interface, etc. Included also is a thread-based multitasking system with semaphores for synchronization. The RoBIOS operating system is discussed in more detail in Chapter B.

Another important part of the EyeCon’s operating system is the HDT (Hardware Description Table). This is a system table that can be loaded to flash-ROM independent of the RoBIOS version. So it is possible to change the system configuration by changing HDT entries, without touching the RoBIOS operating system. RoBIOS can display the current HDT and allows selection and testing of each system component listed (for example an infrared sensor or a DC motor) by component-specific testing routines.

Figure 1.7 from [InroSoft 2006], the commercial producer of the EyeCon controller, shows hardware schematics. Framed by the address and data buses on the top and the chip-select lines on the bottom are the main system components ROM, RAM, and latches for digital I/O. The LCD module is memory mapped, and therefore looks like a special RAM chip in the schematics. Optional parts like the RAM extension are shaded in this diagram. The digital camera can be interfaced through the parallel port or the optional FIFO buffer. While the Motorola M68332 CPU on the left already provides one serial port, we are using an ST16C552 to add a parallel port and two further serial ports to the EyeCon system. Serial-1 is converted to V24 level (range +12V to –12V) with the help of a MAX232 chip. This allows us to link this serial port directly to any other device, such as a PC, Macintosh, or workstation for program download. The other two serial ports, Serial-2 and Serial-3, stay at TTL level (+5V) for linking other TTL-level communication hardware, such as the wireless module for Serial-2 and the IRDA wireless infrared module for Serial-3.

A number of CPU ports are hardwired to EyeCon system components; all others can be freely assigned to sensors or actuators. By using the HDT, these assignments can be defined in a structured way and are transparent to the user program. The on-board motor controllers and feedback encoders utilize the lower TPU channels plus some pins from the CPU port E, while the speaker uses the highest TPU channel. Twelve TPU channels are provided with matching connectors for servos, i.e. model car/plane motors with pulse width modulation (PWM) control, so they can simply be plugged in and immediately operated. The input keys are linked to CPU port F, while infrared distance sensors (PSDs, position sensitive devices) can be linked to either port E or some of the digital inputs.

An eight-line analog to digital (A/D) converter is directly linked to the CPU. One of its channels is used for the microphone, and one is used for the battery status. The remaining six channels are free and can be used for connecting analog sensors.
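To make the A/D channel description a little more concrete, here is a minimal sketch of how a raw converter reading maps to a voltage and how the free channels can be counted. The 10-bit resolution, the 5 V reference and the channel numbers are all assumptions for illustration; the text only states that there are eight channels, with one used for the microphone and one for the battery status.

```python
def adc_to_voltage(raw, bits=10, v_ref=5.0):
    """Convert a raw A/D reading to volts for an ideal converter.

    bits and v_ref are assumed values, not EyeCon specifications.
    """
    max_code = (1 << bits) - 1
    if not 0 <= raw <= max_code:
        raise ValueError("raw reading out of range for this resolution")
    return raw / max_code * v_ref


# Hypothetical channel map: the text does not say which channel numbers
# the microphone and battery status use, so 0 and 1 are made up.
USED_CHANNELS = {0: "microphone", 1: "battery status"}
TOTAL_CHANNELS = 8
free_channels = [ch for ch in range(TOTAL_CHANNELS) if ch not in USED_CHANNELS]

print(adc_to_voltage(512), free_channels)
```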

Friday, March 14, 2008

Robots Which Walk Like Humans

WASHINGTON D.C. - In what could be described as one small step for a robot, but a giant leap for robot-kind, a trio of humanoid machines were introduced Thursday, each with the ability to walk in a human-like manner.

Each bipedal robot has a strikingly human-like gait and appearance. Arms swing for balance. Ankles push off. Eyeballs are added for effect.

One of the robots, from the Massachusetts Institute of Technology (MIT), is named Toddler for its modest stature and the side-to-side wobble of its stride. Denise, a robot created by researchers at Delft University in the Netherlands, stands about as tall as the average woman.

Smart as a toddler

Toddler is the smart one of the bunch. While the others rely on superb mechanical design, Toddler has a brain with less power than that of an ant, but it is able to learn new terrain, "allowing the robot to teach itself to walk in less than 20 minutes, or about 600 steps," scientists said.

The breakthroughs could change the way humanoid robots are built, and they open doors to new types of robotic prostheses -- limbs for people who have lost them. The robots are also expected to shed light on the biomechanics of human walking.

"These innovations are a platform upon which others will build," said Michael Foster, an engineer at the National Science Foundation (NSF) who oversaw the three projects. "This is the foundation for what we may see in robotic control in the future."

The robots were presented today at a meeting of the American Association for the Advancement of Science (AAAS). They are also discussed in the Feb. 18 issue of the journal Science.

More than a toy

Engineers drew from "passive-dynamic" toys dating back to the 1800s that could walk downhill with the help of gravity. Little progress has been made since on getting robots to walk like people.

The new machines navigate level terrain using as little energy as one-half the wattage of a standard compact fluorescent light bulb. The Cornell robot consumes an amount of energy while walking that is comparable to a strolling human of equal weight.

Toy walkers sway from side to side to get their feet off the ground. Humans minimize the swaying and bend their knees in order to pick up their feet. The Cornell and Delft robots employ this approach.

"Other robots, no matter how smooth they are in control, work to stand first, then base motions on top of that," said Cornell researcher Andy Ruina. "The robots we have here are based on falling, catching yourself and falling again."

Cornell's robot equals human efficiency because it uses energy only to push off, and then gravity brings the foot down, while other robots needlessly use energy to perform all aspects of their effort.

"The Cornell team's passive mechanism helps greatly reduce the power requirement," said Junku Yuh, an NSF expert on intelligent systems. "Their work is very innovative."

Not perfect yet:

All three robots swing their arms in synch with the opposite leg for balance. In most ways, though, they are not as versatile as other automatons. Honda's Asimo, for example, can walk backward and up stairs. But Asimo requires at least 10 times more power to achieve such feats.

"The real solution lies somewhere in-between the two," said Steven Collins, a University of Michigan researcher who worked on the Cornell robot. "A robot could use passive dynamics for level or downhill motion, then large motors for high-energy needs like climbing stairs, running or jumping."

Collins is applying what's been learned in an effort to develop better prosthetic feet for humans.

"I think that you can't know how the foot should work until you can understand its role in walking," he said.

The squat Toddler robot gains foot clearance only by leaning sideways, a decidedly non-human approach. But Toddler is remarkable for its ability to learn new terrain and adapt its approach, as would a person.

"On a good day, it will walk on just about any surface and adjust its gait," said MIT postdoctoral researcher Russ Tedrake. "We think it's a principle that's going to scale [up] to a lot of new walking robots."

Tuesday, March 11, 2008

Miniature Human Robot

A new humanoid robot, certified as the world's smallest, was released by Japanese toy manufacturer Tomy Company. On October 25, 2007, the Omnibot I-Sobot is scheduled to hit the market, as is the 2008 edition of Guinness World Records, which will list the product as "The Smallest Humanoid Robot in Production." Robotics fans look forward to I-Sobot as a fun toy to add to their collection, but also as a leap forward in the miniaturization of the advanced parts that go into these high-tech tools.

Surprising Size and Price:

I-Sobot stands just 16.5 centimeters tall and weighs only around 350 g. While the robot fits in the palm of your hand, it remains a fully outfitted bipedal machine, with 17 moving joints. Used throughout the body are tiny, custom servomotors developed by Tomy. The robot's onboard gyro-sensors allow it to maintain its balance automatically as it goes smoothly through its programmed motions. I-Sobot comes with an infrared remote control unit (as you can see in the picture above), but users can also use voice commands to control it. Tomy's I-Sobot architecture, the control system developed to operate this new robot, makes use of 19 ICs (integrated circuits) that work in tandem to enable the toy's complex actions.

I-Sobot will be sold for $248 in fully assembled form, complete with rechargeable batteries and its remote control, which features twin joysticks, programmable buttons and an LCD screen. According to the manufacturer, this price is quite affordable for a robot of this complexity. In addition to its release in Japan, the robot will make its way to the U.S.A. and elsewhere in Asia. In 2008 Tomy intends to extend its sales to Europe as well. To reach its global sales target of 300,000 units, the company is localizing the I-Sobot software in English and Chinese in addition to Japanese.

Four Modes for Action:

An attractive feature of this versatile robot is its 4 separate modes for controlling the action. In RCM (Remote Control Mode), the user manages the robot's movements directly with the command buttons and joysticks on the wireless remote. In Programming Mode the user has the option to easily choose commands from a list of available actions (182 in all), to use the controller to create original actions, or to use a combination of the two to program complex sequences that can be up to 240 steps long, with 80 steps stored in each of the robot's 3 memory slots. Special Action Mode, meanwhile, includes 18 more complex preprogrammed actions, such as "hula dance" and "air drumming", and Voice Control Mode lets the user give the robot one of 10 commands, to which the I-Sobot can respond with a range of actions.

This robot is entertaining to the ear as well as the eye. As it goes through its actions it plays sounds from its library of nearly 100 sound effects and songs. The speaker can be turned off, too, when silent action is preferable. The toy is humanoid in form, but the designers have included playful actions in its repertoire that have it imitate the adorable movements of animals.

Tomy has taken steps to make I-Sobot eco-friendly. The toy manufacturer is shipping the robot with three rechargeable AAA batteries from SANYO Electric Co., whose Eneloop nickel metal hydride batteries let users keep the robot running for months without sending dead batteries to landfills. Tomy is also collaborating in SANYO's Energy Evolution Project by making I-Sobot part of the program carried out at Japanese elementary schools. The companies hope to boost children's awareness of environmental issues by powering the fun robot with rechargeable cells.

Monday, March 3, 2008

Robots in Hospitals

Has it come to this? Robots standing in for doctors at the hospital patient's bedside? Not exactly, but some doctors have found a way to use a video conferencing robot to check on patients while they are miles away from the hospital.

At Baltimore's Sinai Hospital, a joystick-controlled robot outfitted with cameras, a screen and a microphone is guided into the rooms of Dr. Alex Gandsas's patients, where he speaks to them as if he were right there. "The system allows you to be anywhere in the hospital from anywhere in the world," says the surgeon, who specialises in weight loss surgery.


Besides his normal morning and afternoon in-person rounds, Gandsas uses the $150,000 robot to visit patients at night or when problems arise. "They love it. They'd rather see me through the robot," he said of his patients' reaction to the machine.

Gandsas presented the idea to the hospital administrators as a method to more closely monitor patients following weight loss surgery. Gandsas, an unpaid member of the advisory board for the robot's manufacturer who has stock options in the company, added that since its introduction the length of stay has been shorter for the patients visited by the robot.

A chart review study of 376 of the doctor's patients found that the 92 patients who had additional robotic visits had shorter hospital stays. Gandsas's study appears in the July issue of the Journal of the American College of Surgeons.

Nicknamed Bari for the bariatric surgery Gandsas practices, the RP-7 Remote Presence Robotic System by InTouch Technologies is one of a number of robotic devices finding their way into the medical world. About 180 of the robots are in use in hospitals worldwide.

A similar robot is used for teleconferencing with a translator for doctors who don't speak their patient's language. Robotic devices have also been used to guide stroke patients through therapy.

Nurse Florence Ford, who has worked with the robot since it was introduced about 18 months ago, said patients have reacted well, particularly because "seeing the doctors' faces gives them confidence".

Sensors Based Robot Control

Hey everyone... in this post you will find out about the sensors used in robotics and their classification.

Robotics has matured as a system integration engineering field defined by M. Bradley as “the intelligent connection of the perception to action”. Programmable robot manipulators provide the “action” component. A variety of sensors and sensing techniques are available to provide the “perception”.

**ROBOTIC SENSING**

Since the “action” capability is physically interacting with the environment, two types of sensors have to be used in any robotic system:

1)proprioceptors: for the measurement of the robot’s (internal) parameters;

2)exteroceptors: for the measurement of its environmental (external, from the robot's point of view) parameters.

Data from multiple sensors may be further fused into a common representational format (world model). Finally, at the perception level, the world model is analyzed to infer the system and environment state, and to assess the consequences of the robotic system’s actions.

Proprioceptors:

From a mechanical point of view a robot appears as an articulated structure consisting of a series of links interconnected by joints. Each joint is driven by an actuator which can change the relative position of the two links connected by that joint. Proprioceptors are sensors measuring both kinematic and dynamic parameters of the robot. Based on these measurements the control system activates the actuators to exert torques so that the articulated mechanical structure performs the desired motion.

The usual kinematic parameters are the joint positions, velocities, and accelerations. Dynamic parameters such as forces, torques and inertia are also important to monitor for the proper control of the robotic manipulators.

CEG 4392 Computer Systems Design Project.

The most common joint (rotary) position transducers are: potentiometers, synchros and resolvers, encoders, RVDT (rotary variable differential transformer) and INDUCTOSYN. The most accurate transducers are INDUCTOSYNs (± 1 arc second), followed by synchros and resolvers and encoders, with potentiometers as the least accurate.

Acceleration sensors are based on Newton’s second law. They actually measure the force which produces the acceleration of a known mass. Different types of acceleration transducers are known: stress-strain gage, piezoelectric, capacitive, inductive. Micromechanical accelerometers have also been developed. In this case the force is determined by measuring the strain in elastic cantilever beams formed from silicon dioxide by an integrated circuit fabrication technology.
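The principle described above is Newton's second law rearranged: if the sensor measures the force F acting on a known proof mass m, the acceleration is a = F / m. The sketch below shows that step; the function name and the example numbers are illustrative assumptions, not values from any specific accelerometer.

```python
def acceleration_from_force(force_n, proof_mass_kg):
    """Acceleration (m/s^2) of a known proof mass from the measured force (N)."""
    if proof_mass_kg <= 0:
        raise ValueError("proof mass must be positive")
    return force_n / proof_mass_kg


# Assumed example: a 1 gram proof mass experiencing a 2 mN force.
acceleration = acceleration_from_force(0.002, 0.001)
print(acceleration)
```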

Exteroceptors:

Exteroceptors are sensors that measure the positional or force-type interaction of the robot with its environment.

Exteroceptors can be classified according to their range as follows:

1)contact sensors

2)proximity (“near to”) sensors

3)far away sensors

1)Contact Sensors:

Contact sensors are used to detect the positive contact between two mating parts and/or to measure the interaction forces and torques which appear while the robot manipulator conducts part mating operations. Another type of contact sensor is the tactile sensor, which measures a multitude of parameters of the touched object surface.

2)Proximity Sensors:

Proximity sensors detect objects which are near, without touching them. These sensors are used for near-field (object approaching or avoidance) robotic operations. Proximity sensors are classified according to their operating principle: inductive, Hall effect, capacitive, ultrasonic and optical.

Inductive sensors are based on the change of inductance due to the presence of metallic objects. Hall effect sensors are based on the relation which exists between the voltage in a semiconductor material and the magnetic field across that material. Inductive and Hall effect sensors detect only the proximity of ferromagnetic objects. Capacitive sensors are potentially capable of detecting the proximity of any type of solid or liquid materials. Ultrasonic and optical sensors are based on the modification of an emitted signal by objects that are in their proximity.

3)Far Away Sensing:

Two types of far away sensors are used in robotics:

1)Range sensors:

Range sensors measure the distance to objects in their operation area. They are used for robot navigation, obstacle avoidance, and to recover the third dimension for monocular vision.

Range sensors are based on one of the two principles:

a)Time-of-flight:

Time-of-flight sensors estimate the range by measuring the time elapsed between the transmission and return of a pulse. Laser range finders and sonar are the best known sensors of this type.
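The time-of-flight calculation can be sketched in a few lines: the pulse travels to the object and back, so the range is half the round-trip distance. The function name is made up for this example, and the speed of sound used (343 m/s, roughly dry air at 20 °C) is an assumed typical value rather than something given in the text.

```python
SPEED_OF_SOUND_M_S = 343.0          # assumed: dry air at about 20 °C (sonar)
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value, close enough for a laser in air


def range_from_round_trip(elapsed_s, wave_speed_m_s):
    """Range in metres from the time between sending a pulse and receiving its echo."""
    if elapsed_s < 0:
        raise ValueError("elapsed time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return wave_speed_m_s * elapsed_s / 2.0


# Assumed example: a sonar echo arriving 10 ms after transmission.
sonar_range_m = range_from_round_trip(0.010, SPEED_OF_SOUND_M_S)
print(sonar_range_m)
```

The same function covers a laser range finder if the speed of light is passed in instead, which also shows why laser timing electronics must resolve far shorter intervals than sonar.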

b)Triangulation:

Triangulation sensors measure range by detecting a given point on the object surface from two different points of view at a known distance from each other. Knowing this distance and the two view angles from the respective points to the aimed surface point, a simple geometrical operation yields the range.
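One way to write out that geometrical operation, as a sketch: call the known distance between the two viewpoints the baseline b, and let α and β be the view angles measured between the baseline and the line of sight at each viewpoint. The perpendicular distance from the baseline to the target is then d = b · sin α · sin β / sin(α + β). The function name and the convention for measuring the angles are assumptions for this example; a real sensor may define its angles differently.

```python
import math


def triangulate_range(baseline_m, angle_a_rad, angle_b_rad):
    """Perpendicular distance from the baseline to the observed surface point.

    Both angles are measured between the baseline and the line of sight,
    one at each end of the baseline (an assumed convention).
    """
    if baseline_m <= 0:
        raise ValueError("baseline must be positive")
    if angle_a_rad <= 0 or angle_b_rad <= 0 or angle_a_rad + angle_b_rad >= math.pi:
        raise ValueError("angles must be positive and sum to less than pi")
    return (baseline_m * math.sin(angle_a_rad) * math.sin(angle_b_rad)
            / math.sin(angle_a_rad + angle_b_rad))


# Assumed example: viewpoints 1 m apart, both seeing the point at 45 degrees.
distance_m = triangulate_range(1.0, math.radians(45), math.radians(45))
print(distance_m)
```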

2)vision Range Sensors:

Robot vision is a complex sensing process. It involves extracting, characterizing and interpreting information from images in order to identify or describe objects in the environment.

A vision sensor (camera) converts the visual information to electrical signals which are then sampled and quantized by special computer interface electronics, yielding a digital image. Solid state CCD image sensors have many advantages over conventional tube-type sensors, such as small size, light weight, greater robustness and better electrical parameters, which recommend them for robotic applications.

The digital image produced by a vision sensor is a mere numerical array which has to be further processed until an explicit and meaningful description of the visualized objects finally results. Digital image processing comprises several steps: preprocessing, segmentation, description, recognition and interpretation.

Preprocessing techniques usually deal with noise reduction and detail enhancement. Segmentation algorithms, like edge detection or region growing, are used to extract the objects from the scene. These objects are then described by measuring some (preferably invariant) features of interest. Recognition is an operation which classifies the objects in the feature space. Interpretation is the operation that assigns a meaning to the ensemble of recognized objects.
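A toy version of the middle steps of this pipeline can be sketched in plain Python on a tiny grayscale array. Segmentation here is a simple brightness threshold (a stand-in for the edge detection or region growing mentioned above), description measures area and centroid, and recognition is a one-rule classifier. All function names, the threshold of 128 and the size limit are assumptions for illustration; preprocessing and interpretation are left out to keep the example short.

```python
def threshold(image, level):
    """Segmentation: mark pixels brighter than level as object (1), else background (0)."""
    return [[1 if pixel > level else 0 for pixel in row] for row in image]


def describe(mask):
    """Description: area (pixel count) and centroid (row, col) of the object pixels."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    area = len(points)
    if area == 0:
        return {"area": 0, "centroid": None}
    centroid = (sum(r for r, _ in points) / area, sum(c for _, c in points) / area)
    return {"area": area, "centroid": centroid}


def recognize(features, small_limit=4):
    """Recognition: a toy classifier that looks only at the area feature."""
    if features["area"] == 0:
        return "nothing"
    return "small object" if features["area"] <= small_limit else "large object"


# A 4x4 grayscale image with one bright 2x2 blob in the middle.
image = [
    [10, 12, 11, 10],
    [11, 200, 210, 12],
    [10, 205, 198, 11],
    [12, 10, 11, 10],
]

mask = threshold(image, 128)
features = describe(mask)
label = recognize(features)
print(features, label)
```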

Saturday, March 1, 2008

IntroDuction

Hey friends, my name is PRATIK and this blog belongs to me.

The reason behind making this blog was to help all the people and students who are beginners in this field (robotics).

In this blog you can find various things related to robotics, and the best part, which most robotics blogs/sites don't offer, is free tutorials to make your own robot.

So please feel free to browse this blog, and I will try my best to keep it updated as regularly as possible.

If you are interested in helping others by sharing your knowledge, you can leave a comment with your email ID, and I will surely contact you.

Thank you, and enjoy browsing our blog.